AI for a Cultural History

Tracing AI’s Evolution: From Myth to Modern Technology

This reflection is part of the “CulturIA” project, funded by the French National Research Agency, which studies the cultural history of artificial intelligence (AI) from its “prehistory” to its contemporary developments. AI can be defined as the set of mathematical methods and computer technologies designed to solve problems ordinarily handled by the human mind. Its scope ranges from assisting humans with their tasks (digital tools) to replacing them (the horizon of a “general AI” capable of reasoning).

We are at an intermediate stage of this technological development, which concretely began in the 1950s but has been fantasized about for millennia. AI already outperforms humans in many specialized tasks: image and document classification, translation, trend analysis and prediction, the production of sound, text and images, problem solving, and complex games. AI has also demonstrated its ability to plausibly simulate certain interactions. Since 2014, conversational agents have reportedly passed the Turing test, devised by the mathematician Alan Turing in 1950. The test consists in deceiving a human being about whether the interlocutor exchanging messages with them from another room is a person or a machine.

The GPT-3 language model (a so-called “large language model”) has 175 billion parameters. It can hold a plausible conversation on complex subjects, suggest elaborate answers, recommend books, imitate great writers, and translate from one language to another. But GPT-3 only makes statistical predictions, based on the texts it was trained on, about which words are likely to come next. It is not capable of reasoning by analogy and has no representation of the world. John Searle criticized the Turing test in a famous article built around the metaphor of the “Chinese room”, and in his terms GPT-3 manipulates symbols but does not think.

Machine Learning Milestones: From AlphaGo to GANs

Recent developments in AI include advances in machine learning and the emergence of deep learning, including unsupervised approaches, based on networks loosely inspired by biological neurons. Such networks can work on raw, unprepared and unstructured data without being told which features of the data are discriminating. They can also classify that data on their own, provided they are trained on huge quantities of it. Famous examples include AlphaGo, which learned Go in large part by playing against itself. Another is the GAN (Generative Adversarial Network), in which a generator network competes against a discriminator network that judges how convincing its productions are.

These recent developments, along with the emergence of industrial applications, have fuelled intense debate. This debate questions representations of the boundary between the living and the machine, interrogates notions of autonomy and originality, modifies our relationship to memory, and reformulates our philosophical, ethical and aesthetic categories by questioning the very idea of culture. But rather than reducing AI to moral and political dilemmas that oversimplify these issues, it is time to treat AI as a rich cultural object with its own history. AI is in fact a multidisciplinary scientific and intellectual phenomenon and a global cultural object, whose history involves the history of philosophy as much as that of mathematics or biology.

It encompasses a wide range of knowledge, from the philosophy of reasoning to the cognitive sciences. Neural networks have provided a model for a discipline that often attempts to reproduce human mechanisms, or at least borrows metaphors from them, as in “memory” or “attention” networks. Some advances are technological (the steady growth in computing power described by Moore's law), while others result from slow progress in mathematics (linear algebra), across a vast spectrum of disciplinary fields.

An Archaeology of AI: The Layers of History

Foucauldian archaeology is an intellectual model that invites us to think of AI before AI, that is, before its invention in the 1950s. To borrow its vocabulary, the cultural history of ideas needs to identify four kinds of features. There are breaking points (the invention of the famous perceptron, based on feedback theory) and “gentle slopes” (the progress of automata). There are also “epistemological thresholds” (such as the formal logic championed by the Vienna Circle) and “architectural units” (the era of cybernetics, that of deep learning).

This archaeology of AI cannot be reduced to a linear model: AI has often advanced in fits and starts. Many projects remained virtual for lack of technology, such as Babbage's Analytical Engine, a programmable calculating machine conceived in 1834 but never built. Some objects were forgotten for millennia (elaborate Greek automata), and some inventions had no future. Examples include the Antikythera mechanism, the oldest known analog calculator, used to compute astronomical positions. Another is Wilhelm Schickard's calculating machine, which preceded Pascal's Pascaline, often considered the first modern calculating machine, by about twenty years. There were also frequent moments of suspension, such as the first “AI winter”, the slowdown in research in the 1970s. Finally, there were overweening anticipation and outright cheating: Vaucanson's mechanical duck, supposed to simulate digestion, or Johann Wolfgang von Kempelen's Mechanical Turk, a late-18th-century hoax that impressed the world.

Existing histories of AI include the work of Pratt and Nilsson, together with the excellent introductory chapters of Russell and Norvig's reference textbook, Artificial Intelligence: A Modern Approach. These works focus on the birth of AI within cybernetics or on its strictly scientific history. They therefore do not allow us to measure its current multidisciplinary extensions, nor to write an intellectual history of a project with multiple scientific dimensions and cultural resonances.

The recent synthesis from Stanford University looks to the future rather than the past. While some AI creators have achieved celebrity status, little work has been done with AI developers and entrepreneurs themselves, and none from a socio-anthropological perspective. Similarly, AI's key figures have often spoken publicly, yet they have rarely been the subject of dedicated studies, except from the perspective of critical or political philosophy.

Visualizing AI: Cultural Representations in Fiction and Art

The cultural dimension of AI is addressed by the very important Global AI Narratives project. That project, however, aims at a panoramic international approach, producing surveys rather than the integrated historical narrative that is at the heart of our project. From the Antikythera astronomical calculator to computers, via the Pascaline, Babbage's Analytical Engine, William Stanley Jevons' logic piano and Torres-Quevedo's chess player, the prehistory of AI is embodied in concrete, material objects. It is also accompanied by images and fictions, from the Golem to Blade Runner and from the legend of Talos, the bronze giant, to Terminator. So far, these visual dimensions have given rise only to works of limited scope. Some are sketchy, such as Pickover's illustrated history of AI. Others focus solely on a particular historical period, the Enlightenment, and on the theme of automata.

Fictional representations of AI are still studied in a fragmented way, in reference essays or encyclopedias of science fiction. These studies also remain marked by a thematic approach, which joint work between historians of science and historians of culture should move beyond. Instead, we need to emphasize the close links between artistic productions and technological programs, including in the AI industries. Art not only reflects technologies, but also enables us to measure their achievements.

Thus, it was through the creation of images that we became aware of the power of Generative Adversarial Networks. Likewise, the GPT-3 language model demonstrated its powers through the production of plausible stories and pastiches of great authors. Fiction has also been used to anticipate the many consequences of the technological transformations brought about by AI. Yann LeCun, one of the inventors of the convolutional neural networks at the root of modern deep learning, recounts how HAL, the computer in 2001: A Space Odyssey, influenced him. It was a seminal experience for the young man he was, and a fictional mediation shared by many AI researchers. Without even pointing out that Ada Lovelace was Lord Byron's daughter, how can we forget that Norbert Wiener and Marvin Minsky, two of the founding fathers of artificial intelligence, wrote novels? The history of AI therefore cannot be abstract and purely conceptual. AI is a set of technologies inseparable from dreams and fantasies, and its applications depend on situated values and ideologies.

Humanizing AI: A Transcultural Perspective

What remains to be done is a long and deliberately transcultural history of AI. Such a history would confront artistic gestures with the discourses of the scientists who create AI, in order to identify points of comparison, convergence or divergence between the discourses specific to the respective territories of art and science. We need to humanize and embody the history of AI, from the female androids that haunt our memories to Turing's gay body and to animals. Doing so shows that this history is not produced by a deterministic scientific logic but is linked to social, political, cultural and even literary history. It is also an opportunity to study our old anxieties about being replaced by machines, what Günther Anders called “Promethean shame”, as well as old erotic fantasies.

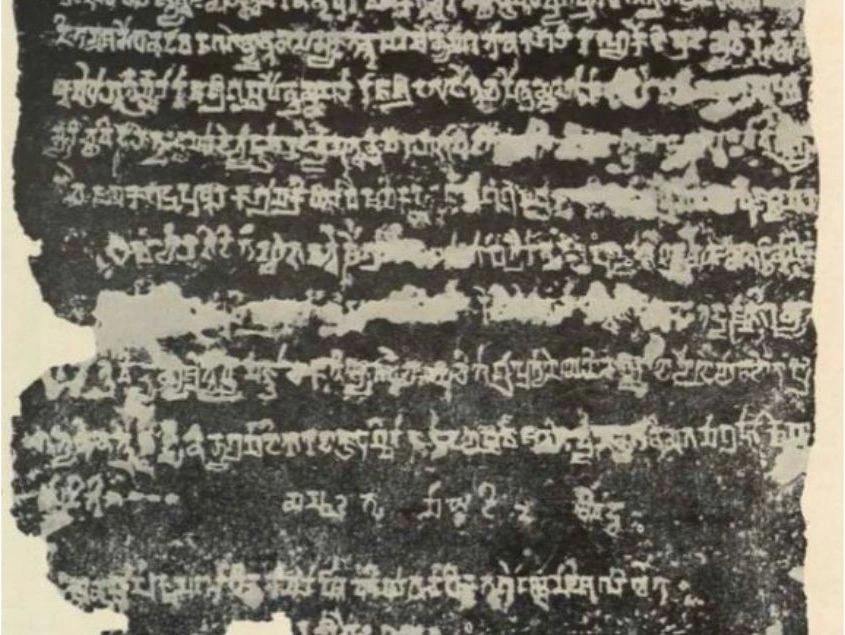

The social history of AI has its roots in the 18th century. Jacquard's loom, which built on 18th-century experiments with punched cards and stands at the dawn of modern computing, was at the heart of one of the first revolts against machines. That revolt was led by the Canuts, the silk workers of Lyon, who broke looms in 1831. The tradition of automata fueled daydreams about the magical potential of anthropomorphic machines, but also nightmares about humans being replaced by superior life forms. In this already long history, miracles rub shoulders with tragedy. Charles Babbage adopted Jacquard's punched cards for his Analytical Engine. Ada Lovelace wrote what is often considered the first computer program, an algorithm for computing Bernoulli numbers that the engine was to execute from punched cards. Punched cards later became central to modern recording and filing systems: in 1928, IBM patented its 80-column card.

On these cards, alphanumeric characters were represented by rectangular perforations arranged in 80 parallel columns of 12 rows each. A cut corner made it possible to check the orientation of the cards in the feeder. IBM's German subsidiary, Dehomag, contributed to the census launched by the Nazis in 1933, during which half a million enumerators went door to door. The results were recorded on 60-column cards, punched at a rate of 450,000 per day. In the Nazi coding scheme, column 22, position 3, designated Jews. A few years later, at Bletchley Park, Turing designed the “bombes” that helped decipher the Nazi Enigma codes. Bletchley Park also produced Colossus, one of the first electronic computers, designed by the engineer Tommy Flowers to break the German Lorenz cipher. The intelligence it supplied was used in preparing the Normandy landings.

The Paradox of AI and Time

This unwritten history is all the more important because the relationship between AI and time is particularly rich. AI embodies the future of our technologies, but also a kind of end of human time, with the advent of immortal machines. Its fictions feature chronological disruptions: time frozen by determinism (Minority Report), a return to the past (Terminator).

But AI produces the future from past data. In this sense, it is fundamentally backward-looking and can encourage a politics of inertia, or even of reaction. At the same time, it fuels dreams of machines that escape time. These dreams eschatologically promise the end of human time, when machine time arrives in the age of the “technological singularity”. Paradoxically, AI thus generates both fears and dreams.

Its history is not only a “tumultuous” one, as one of its historians, Daniel Crevier, puts it, but also a troubled one. In its complex chronology lurk several specters. The first is that of an animist world in which humans coexist with spirits dwelling in things and manifesting the divine. The second is that of a motionless present controlled by machines that repeat a fixed, deterministic vision of the human condition. The third is that of an aftermath, the singularity or posthumanism, in which human beings would be absorbed, or even destroyed, for better or for worse, by the intelligence of machines.
