Hallucinations in Generative AI Models

Creating an "Absolute Truth Machine" remains an elusive goal in AI, and mathematics confirms that hallucinations in generative models are inevitable.

Tracing the Roots: Generative AI Models

Today's Large Language Models (LLMs) are built on the Transformer architecture, introduced in 2017 in the aptly titled paper "Attention Is All You Need". The history of generative AI begins much earlier, however, building on advances in discriminative models used for tasks such as classification and natural language processing. A pivotal step came in 2014, when Ian Goodfellow and colleagues introduced Generative Adversarial Nets (GANs). In a GAN, a generator learns to turn random noise into plausible outputs, while a discriminator learns to tell real examples from generated ones. The two networks are trained against each other, so the generator improves by learning to fool an increasingly accurate discriminator. To truly grasp how these models work, one must look at their mathematical foundations, which describe a mapping between real-world data and a multi-dimensional representation known as the latent space.
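
For reference, the adversarial training described above is usually written as a two-player minimax game. The value function below is the one from the 2014 GAN paper, where $D(x)$ is the discriminator's estimated probability that $x$ is real data, $G(z)$ is the generator's output for a noise sample $z$, and $p_{\text{data}}$ and $p_z$ are the data and noise distributions. The discriminator maximizes this value by scoring real and generated samples correctly, while the generator minimizes it by making $D(G(z))$ large.

```latex
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
```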

Deciphering the Math Behind AI Hallucinations

Modern generative models are massive (GPT-3's 175 billion parameters are commonly quoted as needing about 800 GB of storage), and their capacity to generate data seems practically limitless. Yet the parameter set looks small next to the roughly 45 TB of raw Common Crawl text that was filtered to build GPT-3's training corpus. This disparity raises a pertinent question: how can a model condense insights from 45 TB of text into about 800 GB of parameters, a reduction of roughly 98%? The answer lies in capturing the relationships between concept vectors in a latent-space encoding. By retaining only the parameters of the transformations from real data to latent space and back, the model keeps a roadmap for traversing this intermediate space rather than the data itself. Every trained model is, in essence, the best approximation its iterative training could reach. This makes the latent space remarkably precise, yet it remains bounded by the mathematics of its construction.
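
To make the compression arithmetic explicit, the following minimal Python sketch recomputes the ratio from the figures quoted above, assuming decimal units (1 TB = 1000 GB). The `parameter_storage_gb` helper is a hypothetical illustration, not part of any library; it shows that the storage figure depends on how many bytes each parameter occupies.

```python
# A minimal sketch of the compression arithmetic. The 800 GB and 45 TB figures
# are the ones quoted in the text; decimal units (1 TB = 1000 GB) are an assumption.
PARAMETER_STORAGE_GB = 800.0
RAW_TEXT_GB = 45 * 1000.0


def size_reduction(compressed_gb: float, original_gb: float) -> float:
    """Fractional size reduction, e.g. 0.98 means 98% smaller."""
    return 1.0 - compressed_gb / original_gb


def parameter_storage_gb(num_params: float, bytes_per_param: int) -> float:
    """Hypothetical helper: raw storage for a parameter count at a given precision."""
    return num_params * bytes_per_param / 1e9


reduction = size_reduction(PARAMETER_STORAGE_GB, RAW_TEXT_GB)
print(f"Parameters are {1 - reduction:.1%} of the raw text size ({reduction:.1%} smaller)")

# Storage depends on numeric precision: 4 bytes (float32) vs 2 bytes (float16)
for bytes_per_param in (4, 2):
    print(bytes_per_param, "bytes/param ->", parameter_storage_gb(175e9, bytes_per_param), "GB")
```

At 4 bytes per parameter, 175 billion parameters take 700 GB, and at 2 bytes they take 350 GB, so the exact storage figure depends on the numeric format; the size reduction relative to 45 TB stays at 98% or more either way.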

The latent space is also constrained by the model's architecture and design. Its predefined dimensionality limits how much complexity it can represent. The model's nonlinear operations, indispensable for capturing complex patterns, introduce approximations of their own, so reconstructing the original data exactly is rarely possible. Activation functions reshape the information passing through each layer: the sigmoid squashes every value into the range (0, 1), while ReLU sets negative values to zero. The round trip from data to latent space and back is carried out through matrix multiplications, bias additions, and activation functions.
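
The bottleneck and the activation-induced loss can be seen in a toy example. This is a minimal pure-Python sketch with hypothetical hand-picked weights, not a trained model: a 3-D input is encoded into a 2-D latent vector through a ReLU layer and decoded back with a linear layer. Inputs carrying information that the 2-D latent space or the ReLU cannot hold (a nonzero third coordinate, a negative value) come back distorted.

```python
import math
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]
Activation = Callable[[Vector], Vector]


def relu(v: Vector) -> Vector:
    """ReLU: negative values become 0."""
    return [max(0.0, x) for x in v]


def sigmoid(v: Vector) -> Vector:
    """Sigmoid: squashes every value into (0, 1)."""
    return [1.0 / (1.0 + math.exp(-x)) for x in v]


def identity(v: Vector) -> Vector:
    return list(v)


def dense(W: Matrix, b: Vector, x: Vector, activation: Activation) -> Vector:
    """One layer: activation(W x + b)."""
    z = [sum(w * xi for w, xi in zip(row, x)) + bias for row, bias in zip(W, b)]
    return activation(z)


# Hypothetical hand-picked weights: 3-D input -> 2-D latent -> 3-D output
W_ENC: Matrix = [[1.0, 0.0, 0.0],
                 [0.0, 1.0, 0.0]]
B_ENC: Vector = [0.0, 0.0]
W_DEC: Matrix = [[1.0, 0.0],
                 [0.0, 1.0],
                 [0.0, 0.0]]
B_DEC: Vector = [0.0, 0.0, 0.0]


def reconstruct(x: Vector) -> Vector:
    latent = dense(W_ENC, B_ENC, x, relu)           # encoder with ReLU
    return dense(W_DEC, B_DEC, latent, identity)    # linear decoder


def squared_error(a: Vector, b: Vector) -> float:
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


for x in ([2.0, 3.0, 0.0], [2.0, 3.0, 5.0], [-2.0, 3.0, 0.0]):
    x_hat = reconstruct(x)
    print(x, "->", x_hat, "squared error:", squared_error(x, x_hat))

print(sigmoid([-10.0, 0.0, 10.0]))  # all values land strictly between 0 and 1
```

The first input survives the round trip exactly; the second loses its third coordinate (squared error 25.0), and the third loses its negative value to ReLU (squared error 4.0). A trained autoencoder learns its weights instead of using hand-picked ones, but the same limits apply: whatever the fixed-size latent vector and the activations cannot carry is lost.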

Each layer of a neural network applies a linear mapping, and its activation function then adds a nonlinearity. Stacking such layers lets models emulate the multifaceted relationships present in real-world data. During training, backpropagation computes how much each parameter contributes to the error, and gradient descent uses those gradients to refine the mappings, aiming for the balance between retaining detail and avoiding overfitting. Yet these layered approximations and transformations, while producing imaginative outputs, can drift away from factual accuracy. This gives rise to the phenomenon we call 'hallucination': creative output that is occasionally detached from the real world.
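
Backpropagation and gradient descent can be shown in their simplest form: a single linear unit whose mean-squared-error gradients are derived by hand with the chain rule. This is a minimal sketch; the toy data (generated from y = 2x + 1), the learning rate, and the step count are hypothetical choices. Real models apply the same chain rule across many layers, typically through automatic differentiation.

```python
from typing import List, Tuple


def mse_and_grads(w: float, b: float, xs: List[float], ys: List[float]) -> Tuple[float, float, float]:
    """Forward pass plus hand-derived gradients of mean squared error."""
    n = len(xs)
    loss = grad_w = grad_b = 0.0
    for x, y in zip(xs, ys):
        pred = w * x + b               # forward pass through one linear unit
        err = pred - y
        loss += err ** 2 / n
        # Chain rule: d(err^2)/dw = 2*err*x and d(err^2)/db = 2*err
        grad_w += 2 * err * x / n
        grad_b += 2 * err / n
    return loss, grad_w, grad_b


def train(xs: List[float], ys: List[float], lr: float = 0.05, steps: int = 500) -> Tuple[float, float, List[float]]:
    """Plain gradient descent: step each parameter against its gradient."""
    w, b = 0.0, 0.0
    history: List[float] = []
    for _ in range(steps):
        loss, gw, gb = mse_and_grads(w, b, xs, ys)
        history.append(loss)
        w -= lr * gw
        b -= lr * gb
    return w, b, history


xs = [0.0, 1.0, 2.0, 3.0]
ys = [2.0 * x + 1.0 for x in xs]       # hypothetical toy data from y = 2x + 1
w, b, history = train(xs, ys)
print(f"w={w:.3f}, b={b:.3f}, first loss={history[0]:.3f}, last loss={history[-1]:.6f}")
```

Because this toy data lies exactly on a line, the unit can fit it perfectly; with noisy data and far more parameters than examples, the same procedure can also fit the noise, which is the overfitting side of the balance described above.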

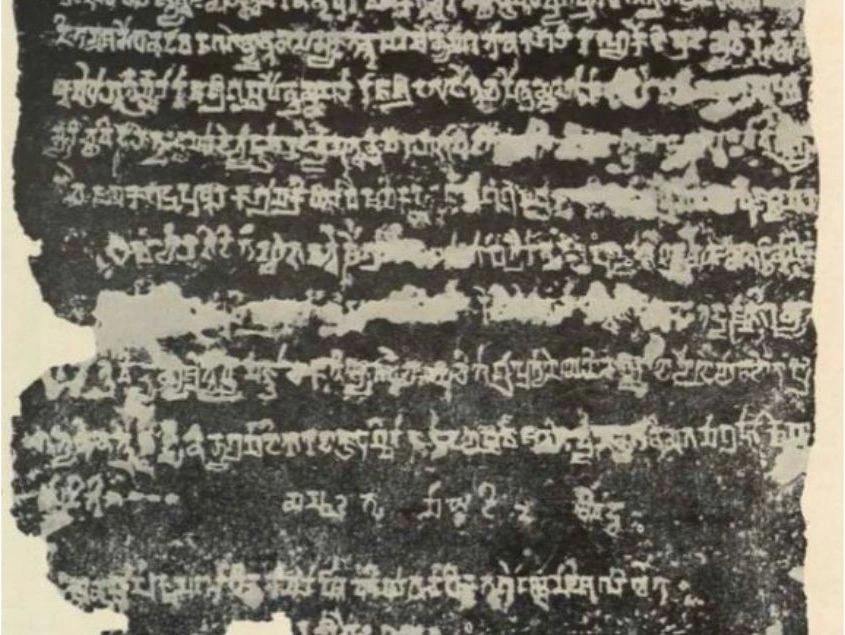

Generative Models and Their Place in Digital Humanities

The ethos of Digital Humanities lies in combining the capabilities of technology with the rigorous methods traditionally used to study human culture and history. Yet the tendency of generative AI models to produce 'hallucinations' is at odds with the exacting standards of disciplines such as history, geography, and cartography. This tension underscores an imperative: Machine Learning methodologies must evolve. To truly serve the Digital Humanities, we must prioritize absolute accuracy and precision, even if it means reining in the unbridled creativity characteristic of generative AI.
