Machine Learning and History: Trust and Its Implications

In the medieval world, trust was big business. Integral to facilitating long-distance trade between parties, its forms and associations allowed new connections – economic and social – to be drawn across cultures. Yet questions of trust are as important to modern-day conceptions of the past as they were to medieval merchants’ emotional responses to their counterparts, both near and far. Someone reading a history book necessarily trusts that the author is acting in good faith, and that their (re)presentation of the source material corresponds to the original.

As part of my role at Historica, I am interested in how new technologies both sustain and disrupt these ways of thinking about the past. For this first article, I want to focus on two particular aspects of this challenge: first, how machine learning (ML) models allow historical analysis to be aggregated across much larger scales than previously; and second, the consequences of this shift for dealing with ‘fuzziness’ in history. In other words, when dealing with the past, how can ML models gain trust as effective arbiters of history?

Machine Learning Novelties

One of the touchstones of Western historiography after the Second World War was the idea that history as a discipline is something that historians do; it’s not simply the generalised ‘past’, the span of time up to the present. Scholars make repeated judgment calls about the evidence they use, sifting material as they go, in response to questions they ask of that material (‘what does document X tell us about Y?’). More recent theoretical approaches have adjusted the kinds of critical eye historians use in dealing with written sources, or archaeologists use for material culture: texts and objects are no longer simply routes to establishing a particular objective ‘fact’, to be slotted into a narrative at will, but are instead also understood as malleable expressions of earlier preoccupations, prejudices and preconceptions.

In any case, no one historian can grapple with the totality of all the material surviving from the past, much as no one reader (or listener, or viewer – whoever is receiving the information) can absorb that totality in their lifetime. The production and consumption of knowledge are inherently time-limited by these pressures, regardless of attempts to promote notions such as ‘total history’. Until very recently, the primary legitimators of historical knowledge – and people’s ‘trust’ in the generation of that knowledge – have been institutional. Universities, above all, have retained a monopoly on degree-granting powers, providing a theoretical basis for ‘trusting’ the judgment of those with a certain level of educational attainment.

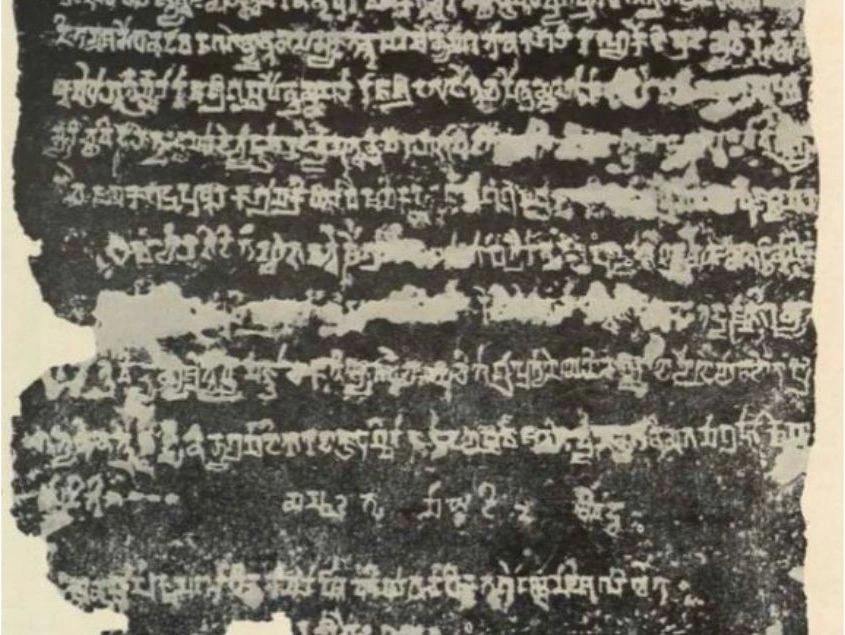

The size of the datasets implied in generative neural networks changes these calculations. As the power of such networks increases, so does their capacity to process and aggregate information more quickly. They also offer an opportunity to diversify the profile of people involved in studying the past: no degree can confer the kind of processing power available to ML models. That processing, however, fails to obviate the question of sifting: what metrics are used to decide that some information is more relevant than other information? What are the documents used to train models? Are those documents primary or secondary to the object of study? Is the model interpreting handwritten text, or translating it into a different language? Trust is crucial here because the historian is human – a human who may be fallible, but can also be held accountable for their decisions about what to ignore and what to include.

Fuzziness in History

Flowing from this, one of the challenges in extrapolating ‘facts’ from data – whoever, or whatever, is performing that extrapolation – lies in what to do with fuzziness. By fuzziness, I mean how discordant pieces of evidence are ‘rationalised’ to decide how much credence is given to them; or rather, how far they can be trusted. A crude example would be if two texts, emanating from two different polities, both claim a particular settlement as under their control. Unlike (for instance) the experimental sciences, the ‘observations’ of the historian are derived from other people’s observations: the experiment of history cannot be run again, to see if it produces a different result. We have to deal with what survives.

There is no single ‘correct’ way of dealing with this fuzziness: if the question posed is ‘who controlled the settlement?’, the historian would need to decide which source to trust. But a different question might be: ‘what do these sources tell us about concepts of control in this period?’ In that sense, might both sources be worthy of trust? How a historian deals with this fuzziness can be mapped on to how ML models might seek to ‘fix’ particular ‘facts’ in language through their own reasoning. But how far is it possible to trust the ‘rationality’ of a particular model? And how far does that rationality translate into the apparent certainty of a historical ‘fact’?

Here I’m picking up on my colleague Ivan Sysoev’s blog article last month about how predictive models can ‘hallucinate’ their way to establishing ‘facts’ without any basis apart from their own training. There is an inherent tension here in the production of history. Much of the past is, by definition, empty space: we do not know what happened in a particular place on a particular day. Based on our own experiences in the world, we trust that one of those days did not witness a cataclysm or rupture that would have been recorded. But historians also look for new ways to recover a past that might otherwise be silent, for instance by combining genetics and textual evidence in the study of medieval plague. The point is that entering this empty space still relies on trust: to trust that neither a historian nor a model is ‘hallucinating’ into it.

Future of Machine Learning Models and History

At this stage, Historica’s main interest lies in digital cartography, and how models can be used to generate historical maps. In my article next month, I will explore how this project necessarily engages questions underpinning not only history, but also neighbouring disciplines (archaeology, anthropology, sociology): how boundaries are fixed or fluid; the significance of the ‘border’, not least as a national construct; and the idea of territoriality as contingent, determined by a certain polity’s claims rather than by any outside metric. While these concepts can appear simple, they also hide changing complexities over time. In spatially orienting history, maps are crucial to making sense of the past, offering a snapshot of a particular moment in time. The goal is to ensure that that snapshot can be trusted.
