Responsible human-guided AI in extracting and exposing different historical narratives

Responsible Human-Guided AI in Diverse Historical Narratives

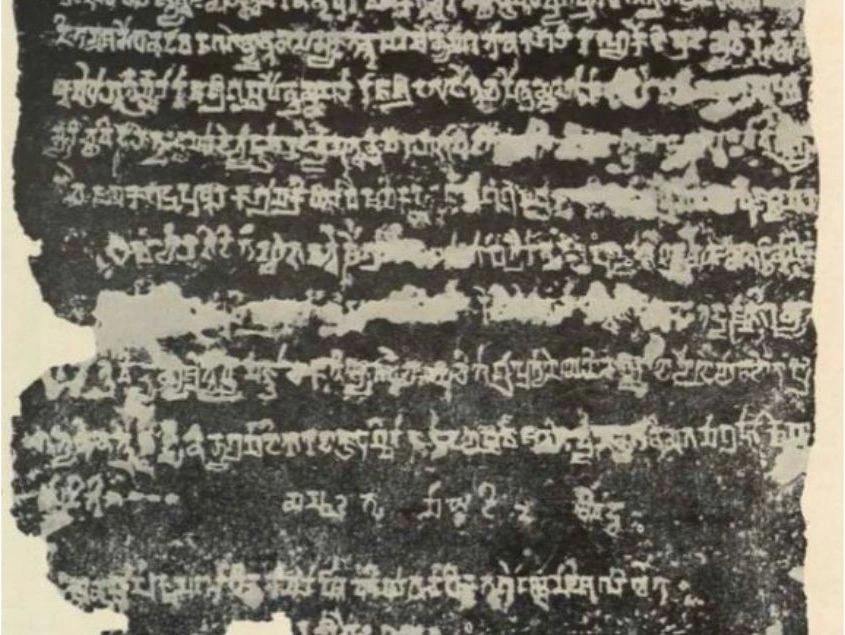

Balancing performance and safety is one of the greatest challenges for AI and digital humanities practitioners. Each will have their own stance on this balance, with substantial implications for the solutions they develop. In this article I share my own stance by describing some of the approaches I take when extracting narratives from school history learning materials as part of the World School History Project, where digital history tools, natural language processing, and machine learning in historical research intersect.

I should emphasise that the approaches I have chosen to adopt are specific both to this particular use case and to my personal inclinations (in particular my small appetite for risk and desire for confidence), especially when these workflows interact with historical documents and historical data analysis.

Responsible “AI”

I have always been wary of the term “Artificial Intelligence” (or even “intelligence”) because it eludes a consensus definition. I prefer instead to speak and write in terms of capabilities, some of which might be more impressive than others; some, for example, are associated with “higher level” human cognition. Developing an AI solution responsibly means developing a solution that reliably exhibits the capabilities required for its intended purpose, which in my case lies within digital humanities and historical studies.

- The performance part of this entails ensuring that the outputs/behaviours arising from the system are sufficient for their use case. Within the context of extracting information from text-based learning materials, this might equate to having the capabilities to summarise text, extract historical entities and events, identify topics, and evaluate sentiment, aligning with semantic analysis of historical texts and text mining historical archives.

- The safety part of this entails ensuring that the outputs/behaviours that we do not want to occur are not produced. Within the context of extracting information from text-based materials, this might equate to summaries not containing fabricated content and the system not mis-identifying entities, events, topics and sentiment, which can be especially critical for data-driven historians.

Decomposing “intelligence” into individual capabilities for transparency

While there has been much excitement and hype around Generative AI and large language models (LLMs), I have resisted applying this approach in extracting information from texts while building a knowledge base (for interested readers who want to learn more about why, you might want to listen to this podcast).

Instead, my approach has been to decompose the problems I want to solve, or the capabilities I wish the system to manifest, into individual capabilities and to adopt the most transparent solution possible for each one. This matters particularly in archival research or historical analysis that requires traceable reasoning.

For example, rather than using an LLM to extract information from history learning materials, I prefer to use more “traditional” natural language processing (NLP) methods such as extractive summarisation (where I can automatically check that the parts of text deemed to be important by the model are truly within the text and not fabricated) and named entity recognition (where I can automatically check that the events, people, and other historical entities identified were truly contained in the text), approaches that fit within NLP-based historical research and digital history projects.
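The automatic checks described above can be sketched in a few lines of Python. This is a minimal illustration rather than the pipeline used in the project: the function names, the normalisation rule, and the sample text are all assumptions made for the example. It only tests whether each extracted sentence or entity occurs verbatim in the source once case and whitespace are normalised.

```python
import re


def normalise(text: str) -> str:
    """Lowercase and collapse whitespace so layout differences do not cause false alarms."""
    return re.sub(r"\s+", " ", text).strip().lower()


def unsupported_spans(source: str, spans: list[str]) -> list[str]:
    """Return the spans that do not occur verbatim (after normalisation) in the source."""
    haystack = normalise(source)
    return [span for span in spans if normalise(span) not in haystack]


# Hypothetical learning-material text and hypothetical model outputs.
source = (
    "The Treaty of Versailles was signed in 1919. "
    "It formally ended the war between Germany and the Allied Powers."
)
summary_sentences = ["The Treaty of Versailles was signed in 1919."]
entities = ["Treaty of Versailles", "Germany", "Woodrow Wilson"]

print(unsupported_spans(source, summary_sentences))  # []
print(unsupported_spans(source, entities))           # ['Woodrow Wilson']
```

A check of this kind is only possible because the outputs of extractive summarisation and named entity recognition are meant to be spans of the original text. Anything the check flags can be rejected or sent for human review.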

In other words, the models I choose to apply are the ones that I understand. This understanding might be achieved from first-principles reasoning or from having observed their behaviour through rigorous experimentation. Often the most transparent models are also the “stupidest”, in the sense that they address only a single task. But because I can rely on them to perform the way I expect them to with respect to a particular task, I feel far more confident in their outputs and am happier building things on top of them, such as historical data visualization and interactive chronological maps later on.

Experimenting rigorously when transparency is not possible

There are times when the capabilities we require demand the adoption of a model that is not transparent. Furthermore, even the simple “stupid” models I have mentioned above can have a black box aspect to them. As a general rule, I treat models that I have not yet experimented with very much as if they were new colleagues that I had not previously worked with. I don’t presume to know to what extent they will misclassify, misidentify, hallucinate, or manifest the human biases of the material they have been trained on. This is particularly important for anyone working with cultural heritage or interactive world history timelines.

Just as I would hold back from trusting a new colleague with high-stakes tasks on the first day of working with them, the kinds of tasks (both in terms of domain knowledge and complexity) I would entrust to an AI model or system depend very much on what I have gleaned from experimenting rigorously with the tasks I need it to perform reliably.

For this reason, I test all models rigorously against a set of human ratings of outputs generated from the model. These ratings indicate only the extent to which the machine outputs agree with the resources they are derived from, not whether they are factually accurate; if the resource stated a falsehood, we would want that falsehood to be reflected in the output. This also helps to protect against human bias, since the question is not “is this true?” (which could easily be subject to biased responses), but rather “is the model’s output supported by the resources it has seen?”. To scale this, I adopt human-guided machine evaluation to tune an automated solution based on the human ratings (see also this article for more details), a practice aligned with responsible AI in history education resources.
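One possible reading of tuning an automated solution against human ratings is sketched below. It is not a description of the actual evaluation method, which is not detailed here. The token-overlap score, the threshold search, and the rated examples are all hypothetical stand-ins: the idea is simply to choose the automated cut-off whose “supported / not supported” decisions agree most often with the human raters.

```python
def support_score(output: str, resource: str) -> float:
    """Fraction of output tokens that also appear in the resource (a crude, assumed proxy)."""
    output_tokens = output.lower().split()
    resource_tokens = set(resource.lower().split())
    if not output_tokens:
        return 0.0
    return sum(token in resource_tokens for token in output_tokens) / len(output_tokens)


def tune_threshold(scores: list[float], human_labels: list[bool]) -> tuple[float, float]:
    """Pick the cut-off whose decisions agree most often with the human ratings."""
    best_threshold, best_agreement = 0.0, -1.0
    for threshold in sorted(set(scores)):
        predictions = [score >= threshold for score in scores]
        matches = sum(p == h for p, h in zip(predictions, human_labels))
        agreement = matches / len(human_labels)
        if agreement > best_agreement:
            best_threshold, best_agreement = threshold, agreement
    return best_threshold, best_agreement


# Hypothetical data: (model output, resource, human rating of "supported by the resource?").
resource = "the treaty was signed in 1919 in paris"
rated_examples = [
    ("the treaty was signed in 1919", resource, True),
    ("the treaty was signed in 1920", resource, False),
    ("the king abdicated", resource, False),
    ("signed in paris", resource, True),
]

scores = [support_score(output, res) for output, res, _ in rated_examples]
labels = [label for _, _, label in rated_examples]
threshold, agreement = tune_threshold(scores, labels)
print(threshold, agreement)  # 1.0 1.0
```

In practice the automated scorer would be something richer than token overlap, and the tuned threshold would be validated on human ratings held out from the tuning step. The sketch only shows how human ratings can anchor an automated check.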

Broadening horizons while laying solid foundations

While I believe there to be much potential for AI to play a significant role in historical research, I hope that by sharing my more cautious approach, I have made it easier for readers to think about and discuss what is at stake when making their own decisions with respect to AI. For my part, I would like to ensure that the foundations we lay for AI applications in this domain are as solid as possible, as these tools evolve toward AI-generated historical maps, historical geography mapping, and future interactive historical maps that support diverse historical narratives.
